By: Ming Sun

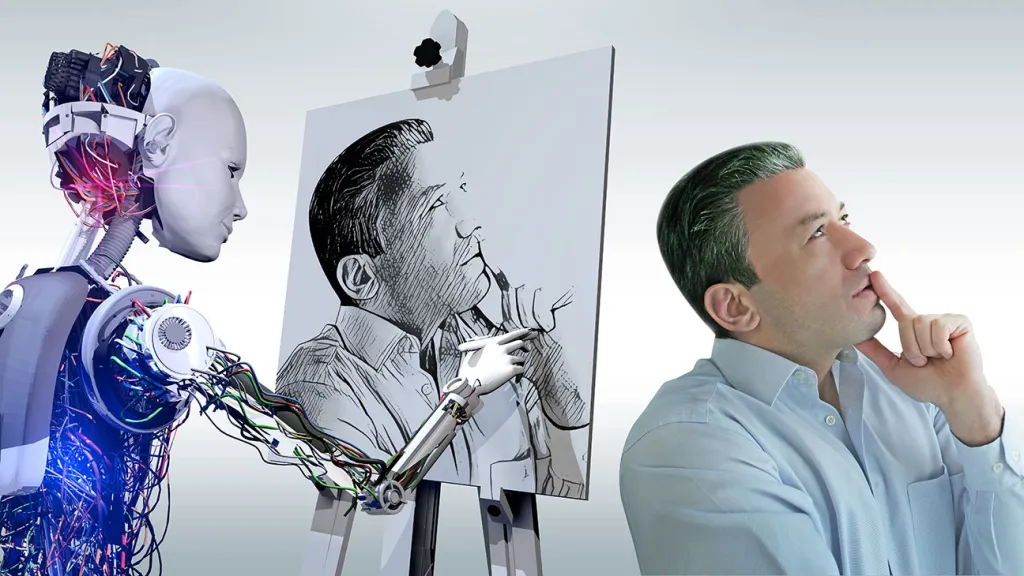

Crimes against children have been at the center of public attention for as long as news outlets and social media have existed. These horrific stories involve the torture, abduction, and even murder of children, and they haunt the victims’ loved ones for years. More recently, as AI has become more widely available to the public, a group of content creators has taken it upon itself to raise awareness of what happened to these children by giving them an AI-generated voice to narrate their own stories.

One such example is James Bulger, a British two-year-old who was abducted and later tortured and killed by a pair of ten-year-olds in 1993. In multiple recent TikTok videos, an AI-generated image of James with a moving mouth is shown while a childlike voice narrates his death from his perspective. Denise Fergus, James’ mother, has described these videos as “disgusting” (The Washington Post). In an interview with The Mirror and The Washington Post, she said that she could find no words to describe the actions of these content creators (The Washington Post). According to The Mirror, Stuart Fergus, Denise Fergus’ husband, reached out to one such content creator and asked them to take down their video. He was met with this response: “We do not intend to offend anyone. We only do these videos to make sure incidents will never happen again to anyone. Please continue to support and share my page to spread awareness” (The Washington Post).

Many people agree with the Fergus family that this content is disturbing and could be insulting to the deceased and their loved ones. In fact, a TikTok spokesperson said that TikTok does not allow synthetic media about children and that efforts will be made to take this content down (The Washington Post). Despite TikTok’s efforts, however, new videos keep appearing, and this kind of content has even spread to other platforms such as YouTube.

Felix M. Simon, a communication researcher at the Oxford Internet Institute, said that these videos “have the potential to re-traumatize the bereaved”, adding that “it is a widely held belief in many societies and cultures that the deceased should be treated with dignity and have certain rights”. According to Simon, the videos could violate the rights of the dead because their personality and image are used without their permission, and the family of the deceased may perceive the videos as insulting to their loved one (The Washington Post).

This kind of content is likely to become more common now that AI is an everyday tool for many. According to Hany Farid, a professor of digital forensics at the University of California at Berkeley, the best way to combat this content is to adapt to these changes, as they are inevitable in the face of advancing technology (The Washington Post).