By: Annabelle Ma

On Friday, seven leading A.I. companies in the U.S. (Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI) agreed to voluntary safeguards on the development of A.I. They pledged to manage the risks of the new tools even as they compete to develop the technology.

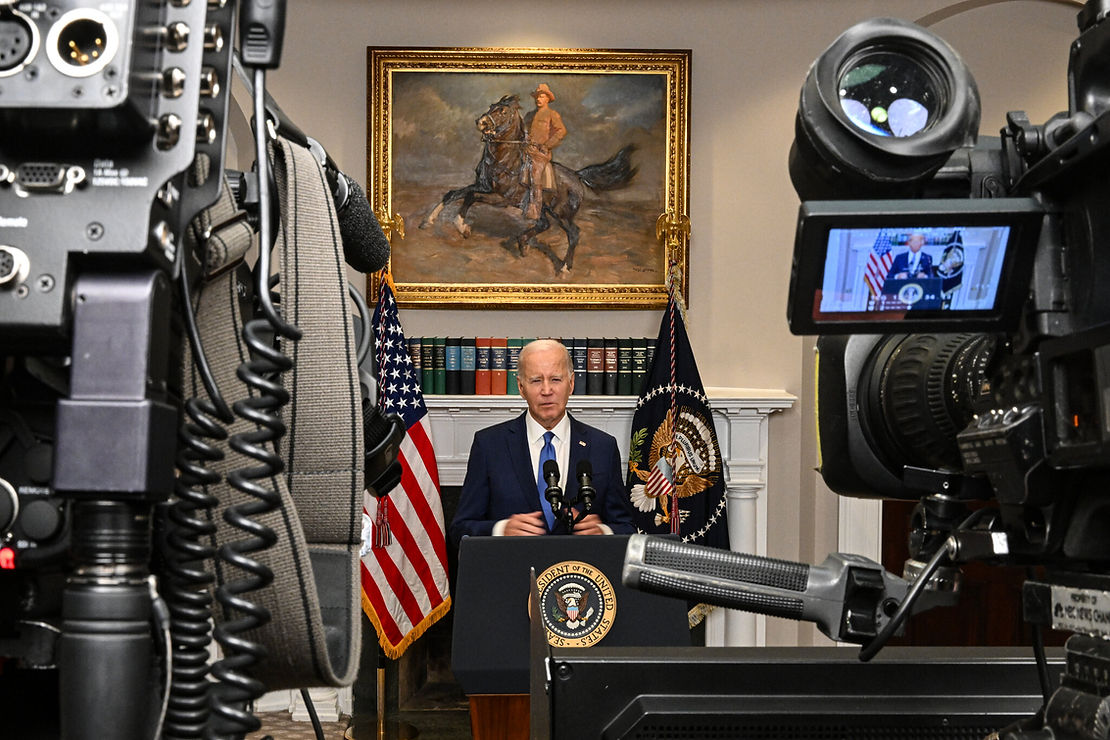

“This [A.I.] is a serious responsibility; we have to get it right,” President Biden said in the Roosevelt Room at the White House. “And there’s enormous, enormous potential upside as well.”

Brad Smith, the president of Microsoft, voiced his support for the voluntary safeguards.

“By moving quickly, the White House’s commitments create a foundation to help ensure the promise of A.I. stays ahead of its risks,” Mr. Smith said.

As A.I. becomes more sophisticated, its risks grow, and voluntary safeguards are only an early step in addressing them. Another major challenge for the U.S. is limiting the ability of China and other competitors to analyze the new A.I. programs, as well as the components used to develop them.

To address these risks, Friday’s agreement includes commitments to test products for security risks and to use watermarks so that consumers can tell which content is A.I.-generated and which is not.

China is known to organize hacking groups to surveil U.S. plans. Even Microsoft, one of the most security-conscious companies, is vulnerable to these attacks. Just last week, Microsoft was hit by a Chinese government-organized hack of American officials’ private emails, in which the attackers stole a “private key” used to authenticate emails.

China and the U.S. are also rivals in A.I. development. As the U.S. and its competitors, particularly China, race to develop the technology, such hacks are likely to become more and more common.

Source: https://www.nytimes.com/2023/07/21/us/politics/ai-regulation-biden.html